XR Introduction

An introduction to Virtual Reality (VR) and Augmented/Mixed Reality (AR/MR).

Virtual Reality

Overview

Virtual Reality (VR) is a technology that presents computer-generated digital content to users. It has the following three features:

- Immersion: multi-sensory feedback makes users feel present in the virtual environment

- Interaction: users and virtual content interact naturally and in real time

- Imagination: the immersive, realistic experience stimulates users' imagination

A VR system has three parts: the users, the computing system that hosts the virtual content, and the human-machine interfaces between them.

Virtual Content Creation

There are two ways to create virtual content:

Based on 3D geometric model

- Similar to content development for computer games

- Related to the Computer Graphics subject

  - Modeling: represent objects with mathematical models

  - Rendering: determine the color of each pixel from the model, lights, shaders, textures, etc.; must run in real time (at least 30 frames/s)

  - Animation: compute the motion of each point of the mathematical model

- Tools

  - Content creation: 3ds Max, Maya, Photoshop

  - Basic graphics libraries: OpenGL, Direct3D (D3D)

  - Graphics system development libraries (3D graphics engines): OSG (OpenSceneGraph), OGRE (Object-Oriented Graphics Rendering Engine)

    - Development is done entirely through programming

    - Open source

  - VR engines

    - Vega, World Tool Kit, VRPlatform, Virtools: professional VR engines

      - Can meet the accuracy requirements of real-environment data

      - Can build large-scale environment maps

    - Unity3D, Unreal: game engines

  - Web standard languages: VRML (Virtual Reality Modeling Language)/X3D, WebGL/HTML5

Based on real images

- Create the virtual environment directly from real images.

- Image -> Object -> Image: this route involves Computer Vision (2D -> 3D) and Computer Graphics (3D -> 2D).

- IBMR technology (Image-Based Modeling and Rendering)

  - Skips the middle (Object) stage of Image -> Object -> Image

  - Samples the scene at discrete viewpoints

  - Models the scene from the sampled images

  - Resamples (renders) the scene at any viewpoint

- IBMR: panorama

  - Steps

    - Take the images and pre-process them

    - Map them to cylindrical coordinates

    - Register the images based on image features

    - Blend the images

    - Project the blended image onto a cylinder

    - Generate the view according to the viewpoint

  - Tools

    - OpenCV, Panorama Tools, Hugin (open source)

    - krpano, UtoVR (commercial)

- Other IBMR (lets users walk around in the scene)

  - Concentric mosaics: capture images with cameras rotating on concentric circles around a single center

  - Light field: capture images with a camera array

  - Volumetric video
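The cylindrical mapping step of the panorama pipeline can be sketched as below. It assumes a pinhole camera rotating about its vertical axis, with the focal length `f` known in pixels and image coordinates measured from the image center; the function names are illustrative. After this warp, images taken from the same position differ only by a horizontal shift, which simplifies the registration step.

```python
import math


def to_cylindrical(x, y, f):
    """Map an image point (x, y), in pixels from the image center, onto a
    cylinder of radius f (the focal length in pixels).

    Returns (x_cyl, y_cyl): arc length along the cylinder and height on it,
    both scaled by f so they stay in pixel units.
    """
    theta = math.atan2(x, f)       # horizontal angle of the viewing ray
    h = y / math.hypot(x, f)       # height where the ray meets the unit cylinder
    return f * theta, f * h


def cylindrical_warp_coords(width, height, f):
    """Cylindrical coordinates of every pixel center of a width x height
    image (row-major), relative to the image center."""
    cx, cy = (width - 1) / 2, (height - 1) / 2
    return [[to_cylindrical(u - cx, v - cy, f) for u in range(width)]
            for v in range(height)]
```

A real stitcher would invert this mapping (for each output pixel, find the source pixel) and interpolate, then register and blend the warped images.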

Human-machine Interfaces

Human-machine interfaces include sensing interfaces (how the computer presents virtual content to humans) and tracking interfaces (how humans provide input to the computer).

- Sensing interfaces (output interfaces)

  - Visual

    - Helmets (head-mounted displays)

    - Glasses

    - 180° large screens

    - CAVE

  - Audio

  - Touch

  - …

- Tracking interfaces (input interfaces)

  - The user's head, eyes, hands, speech, and body

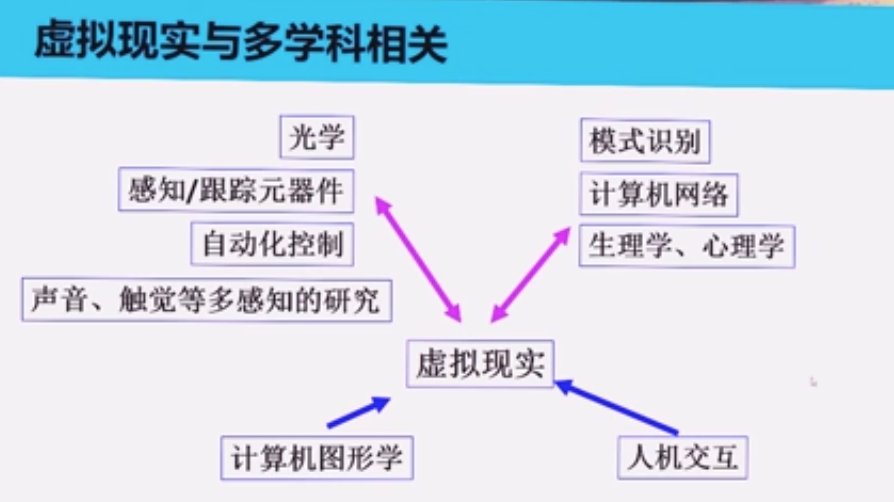

As the figure shows, interaction interfaces draw on many different subjects.

Visual Sensing Interfaces

Basic categories of visual sensing devices:

- Head-mounted

- Handheld

- Spatial projection

Some immersive visual sensing devices:

- HMD (head-mounted display)

  - Left-eye and right-eye screens

  - Computing system

  - Rotation sensors (gyroscope)

  - Commercial products

    - Used with a phone: Gear VR, Google Cardboard

    - Used with a PC: Oculus Rift, HTC Vive, PS VR

    - Standalone: Oculus Go

- Stereo glasses (3D glasses)

  - Left-eye and right-eye filters

  - Categories

    - Time-multiplexed (shutter) stereo display

    - Color-difference (anaglyph) stereo display

    - Polarized stereo display

- Large stereo screens (used together with stereo glasses)

  - Multi-projector screens that blend the overlapping areas of several projectors

  - Large LED screens

  - Spherical screens

  - CAVE

- Autostereoscopic and holographic displays

  - No wearable devices or tracking devices required

  - Autostereoscopic display

  - Volumetric 3D display

  - Integral imaging 3D display

  - Hologram
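The color-difference (anaglyph) method can be sketched as a channel swap. This assumes red-cyan glasses with the red filter over the left eye (the common convention) and images stored as nested lists of `(r, g, b)` tuples; it is the simplest variant and ignores the color-balancing tweaks real anaglyph encoders apply.

```python
def anaglyph(left, right):
    """Combine a left/right stereo pair into a red-cyan anaglyph.

    The red channel comes from the left-eye image and the green/blue (cyan)
    channels from the right-eye image, so the glasses' filters route each
    view to the matching eye.
    """
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have the same size")
    return [[(lp[0], rp[1], rp[2]) for lp, rp in zip(lrow, rrow)]
            for lrow, rrow in zip(left, right)]
```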

Other Sensing Interfaces

- Auditory sensing interface

  - Virtual surround sound

- Tactile sensing interface

Tracking interfaces

- Pose tracking interfaces

  - Skin/muscle signals: Myo armband

  - Optical: based on the stereo vision principle; several cameras capture the user, who wears trackers (markers)

  - IMU (inertial measurement unit)

- Voice recognition and interaction

- Brain-computer interface
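The stereo vision principle behind optical tracking can be sketched for the simplest case: two calibrated, rectified cameras (parallel optical axes, same focal length) see the same marker, and its depth follows from the disparity as Z = f·B/d. The function name and parameters are illustrative; systems with many cameras use general multi-view triangulation instead.

```python
def triangulate_rectified(x_left, x_right, y, f, baseline, cx=0.0, cy=0.0):
    """Recover the 3D position of a marker seen by two rectified cameras.

    x_left, x_right: horizontal pixel coordinate of the marker in each image
    y: vertical pixel coordinate (the same in both images after rectification)
    f: focal length in pixels; baseline: distance between camera centers (m)
    cx, cy: principal point in pixels
    Returns (X, Y, Z) in the left camera's frame, in the baseline's unit.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("marker must have positive disparity")
    z = f * baseline / disparity          # depth is inversely proportional to disparity
    return ((x_left - cx) * z / f, (y - cy) * z / f, z)
```

For example, with f = 800 px and a 0.1 m baseline, a 40 px disparity puts the marker 2 m away.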

Computer Graphics Subject

Computer graphics studies how computers compute, process, and display shapes, in particular how to convert 2D/3D objects into the 2D pixel grid shown on a screen. Its research covers the following parts.

Modeling

Represent objects with mathematical models:

- Polygonal and parametric methods

- Point-based modeling: sample points on the surface

- Volume data: sample points in 3D space
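The parametric, point-based, and volume approaches can be contrasted on a single sphere. The sketch below uses illustrative function names and arbitrary resolutions. It defines the sphere parametrically, samples points uniformly on its surface, and samples 3D space on a voxel grid that records which samples lie inside.

```python
import math
import random


def sphere_point(u, v, r=1.0):
    """Parametric model: u in [0, 2*pi) is longitude, v in [0, pi] is colatitude."""
    return (r * math.sin(v) * math.cos(u),
            r * math.sin(v) * math.sin(u),
            r * math.cos(v))


def sample_surface_points(n, r=1.0, seed=0):
    """Point-based model: n points sampled uniformly on the sphere's surface."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        u = rng.uniform(0.0, 2.0 * math.pi)
        v = math.acos(rng.uniform(-1.0, 1.0))  # uniform cos(v) gives uniform area density
        points.append(sphere_point(u, v, r))
    return points


def sample_volume(n, r=1.0):
    """Volume data: an n x n x n grid (n >= 2) over [-r, r]^3 holding 1 inside the sphere, else 0."""
    step = 2.0 * r / (n - 1)
    coords = [-r + i * step for i in range(n)]
    return [[[1 if x * x + y * y + z * z <= r * r else 0 for x in coords]
             for y in coords] for z in coords]
```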

Rendering

Rendering determines the color of each pixel from the model, lights, shaders, textures, etc., using the graphics pipeline.

- Graphics pipeline: two ways to draw

  - Pixel-by-pixel drawing: cast a ray from each pixel toward the objects

  - Piece-by-piece drawing (surface patches): project each patch of an object's surface onto the screen, through the stages Vertex Operations -> Rasterization -> Fragment Operations -> Frame Buffer

    - Vertex operations: transform the vertices

    - Rasterization: convert shapes into a 2D grid (pixel) image

    - Fragment operations: compute the color of each pixel

    - Frame buffer: memory that holds the image for display

- Graphics system development libraries (software)

  - Provide interfaces for operating the graphics card driver and provide the graphics pipeline for rendering

  - OpenGL: cross-platform

  - Direct3D: widely used for games

- GPU (hardware)

  - Parallel computation, hence faster rendering

  - Programmable shaders in the graphics pipeline: Vertex Shader -> Geometry Shader -> Rasterization -> Fragment Shader -> Frame Buffer

  - Shader languages: HLSL for Direct3D, GLSL for OpenGL
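The piece-by-piece pipeline can be sketched in pure Python. A vertex operation projects camera-space vertices, rasterization tests pixel centers with edge functions, a fragment operation performs a depth test, and two nested lists stand in for the frame buffer and depth buffer. Names such as `draw_triangle` are illustrative, not OpenGL or Direct3D calls; real pipelines run these stages on the GPU.

```python
def project(vertex, f, width, height):
    """Vertex operation: perspective-project a camera-space point (x, y, z), z > 0,
    to screen pixels (y pointing down), keeping z for the depth test."""
    x, y, z = vertex
    return width / 2 + f * x / z, height / 2 - f * y / z, z


def edge(ax, ay, bx, by, px, py):
    """Edge function: twice the signed area of triangle (a, b, p)."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def draw_triangle(tri, color, f, frame, depth):
    """Rasterize one camera-space triangle into `frame` using the z-buffer `depth`."""
    height, width = len(frame), len(frame[0])
    (x0, y0, z0), (x1, y1, z1), (x2, y2, z2) = (project(v, f, width, height) for v in tri)
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return  # degenerate triangle covers no pixels
    for py in range(height):
        for px in range(width):
            cx, cy = px + 0.5, py + 0.5  # sample at the pixel center
            w0 = edge(x1, y1, x2, y2, cx, cy) / area
            w1 = edge(x2, y2, x0, y0, cx, cy) / area
            w2 = edge(x0, y0, x1, y1, cx, cy) / area
            if w0 < 0 or w1 < 0 or w2 < 0:
                continue  # pixel center lies outside the triangle
            # Fragment operation: perspective-correct depth (interpolate 1/z),
            # then keep the fragment only if it is nearer than what is stored.
            z = 1.0 / (w0 / z0 + w1 / z1 + w2 / z2)
            if z < depth[py][px]:
                depth[py][px] = z
                frame[py][px] = color
```

Usage: create `frame = [["."] * 8 for _ in range(6)]` and `depth = [[float("inf")] * 8 for _ in range(6)]`, call `draw_triangle(...)` once per triangle, then print the rows of `frame`. Dividing by `area` makes the weights positive inside the triangle for either vertex winding.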

Basic Rendering Technology

To render an object on the screen, the following effects must be computed:

- Lighting effects

  - Direct lighting

    - Diffuse reflection

    - Specular reflection

    - Ambient reflection

  - Indirect lighting

    - Ray tracing

    - Radiosity

    - Photon mapping

- Texture effects

  - Map a texture function onto the object's surface to modify the parameters of the diffuse lighting

  - Can affect color, bumps, reflection, and transparency

  - Methods

    - Bump mapping

    - Displacement mapping

- Shadow effects

  - Shadow rays: cast a ray from the surface point toward the light source; the point is in shadow if the ray is blocked

  - Shadow volumes: project the occluding object's silhouette away from the light to form a volume; points inside that volume are in shadow

  - Shadow mapping: render the scene from the light's position into a texture that stores depth, then render from the camera and compare each point's distance to the light against that stored depth to decide whether it is in shadow

- Environment mapping

  - An object with a smooth, mirror-like surface reflects its environment

  - Pipeline

    - Capture images of the environment with a camera at the center

    - Map them onto the surface of a sphere

    - Look up the object's reflected color from the sphere

- Baking

  - Place the object in the lighting environment, compute its appearance once, and store the result in a texture; afterwards simply apply the texture to the object
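The three direct-lighting terms (ambient, diffuse, specular) are classically combined in the Phong reflection model. A minimal sketch for a single white light of unit intensity. The coefficients `ka`, `kd`, `ks` and `shininess` are arbitrary example values.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def phong(normal, to_light, to_viewer, ka=0.1, kd=0.7, ks=0.2, shininess=32):
    """Phong direct-lighting intensity at a surface point.

    All vectors point away from the surface point and are normalized here.
    Returns ambient + diffuse + specular.
    """
    n, l, v = normalize(normal), normalize(to_light), normalize(to_viewer)
    ambient = ka
    n_dot_l = dot(n, l)
    if n_dot_l <= 0:
        return ambient  # light is behind the surface: only ambient remains
    diffuse = kd * n_dot_l  # Lambert's cosine law
    r = tuple(2 * n_dot_l * nc - lc for nc, lc in zip(n, l))  # l mirrored about n
    specular = ks * max(dot(r, v), 0.0) ** shininess
    return ambient + diffuse + specular
```

The specular highlight is brightest when the viewer looks along the mirrored light direction `r`; a larger `shininess` makes it smaller and sharper.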

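The two passes of shadow mapping can be sketched on point samples. The sketch assumes a directional light shining straight down the -z axis, so the light's view is an orthographic projection onto a grid of texels and a point's depth from the light is simply -z. A real implementation renders the depth map on the GPU and transforms points with the light's view-projection matrix.

```python
import math


def build_shadow_map(points, size, cell):
    """Pass 1: from the light's viewpoint, keep the smallest depth per texel
    (the surface closest to the light). Assumes light along -z, depth = -z."""
    shadow_map = [[math.inf] * size for _ in range(size)]
    for x, y, z in points:
        i, j = int(y // cell), int(x // cell)
        if 0 <= i < size and 0 <= j < size:
            shadow_map[i][j] = min(shadow_map[i][j], -z)
    return shadow_map


def in_shadow(point, shadow_map, cell, bias=1e-3):
    """Pass 2: a point is shadowed if something nearer the light occupies its texel.

    The small bias avoids "shadow acne" (a surface wrongly shadowing itself).
    """
    x, y, z = point
    i, j = int(y // cell), int(x // cell)
    if not (0 <= i < len(shadow_map) and 0 <= j < len(shadow_map[0])):
        return False  # outside the shadow map: treat as lit
    return -z > shadow_map[i][j] + bias
```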
Accelerated rendering technology

- LOD (Level of Detail)

- Set different levels of the model according to the viewing distance. Farther, Rougher

- Billboard

- Use a 2D plane instead of 3D objects. But the plane always faces to human

- Scene culling

- Don’t generate objects that are invisible

- Requires scene management

- Scene Graph

- Form the objects in tree data structure according to the logic of the objects

- Use for dynamic scene

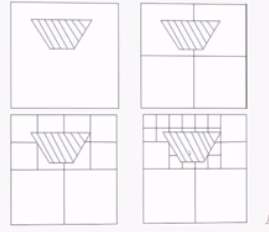

- Space division

- Form the objects in tree data structure according to the location of the objects

- Use for static scene

- Scene Graph

Space division example - Quadtree
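A minimal quadtree sketch for 2D space division, for example objects' ground-plane positions in a static scene. Each node splits into four children once it holds more than `capacity` points. A region query skips every subtree whose square lies outside the region, and that pruning is what makes culling cheap. `capacity` and `max_depth` are arbitrary example parameters, and the class is illustrative rather than taken from any engine.

```python
class Quadtree:
    """Square region [x, x + size) x [y, y + size) that splits when full."""

    def __init__(self, x, y, size, capacity=4, depth=0, max_depth=8):
        self.x, self.y, self.size = x, y, size
        self.capacity, self.depth, self.max_depth = capacity, depth, max_depth
        self.points = []
        self.children = None  # four sub-squares once split

    def contains(self, px, py):
        return self.x <= px < self.x + self.size and self.y <= py < self.y + self.size

    def insert(self, px, py):
        """Insert a point; returns False if it lies outside this node."""
        if not self.contains(px, py):
            return False
        self._insert(px, py)
        return True

    def _insert(self, px, py):
        if self.children is None:
            if len(self.points) < self.capacity or self.depth == self.max_depth:
                self.points.append((px, py))
                return
            self._split()
        self._child_for(px, py)._insert(px, py)

    def _child_for(self, px, py):
        half = self.size / 2
        col = 1 if px >= self.x + half else 0
        row = 1 if py >= self.y + half else 0
        return self.children[row * 2 + col]

    def _split(self):
        half = self.size / 2
        self.children = [Quadtree(self.x + col * half, self.y + row * half, half,
                                  self.capacity, self.depth + 1, self.max_depth)
                         for row in (0, 1) for col in (0, 1)]
        old, self.points = self.points, []
        for p in old:  # push existing points down into the children
            self._child_for(*p)._insert(*p)

    def query(self, qx, qy, qw, qh, found=None):
        """Collect points inside the rectangle [qx, qx + qw) x [qy, qy + qh)."""
        if found is None:
            found = []
        if (qx >= self.x + self.size or qx + qw <= self.x or
                qy >= self.y + self.size or qy + qh <= self.y):
            return found  # no overlap: cull this node and its whole subtree
        for px, py in self.points:
            if qx <= px < qx + qw and qy <= py < qy + qh:
                found.append((px, py))
        for child in self.children or []:
            child.query(qx, qy, qw, qh, found)
        return found
```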

Collision detection

- Classified by time domain: static, discrete dynamic, continuous dynamic

- Classified by space domain

  - Image-space based

  - Geometric-space based

    - Hierarchical bounding boxes: test large bounding boxes first to quickly filter out pairs that cannot collide
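Filtering with hierarchical bounding boxes can be sketched with axis-aligned bounding boxes (AABBs), which are one common choice of bounding volume; spheres and oriented boxes are others. The tree layout and names are hypothetical. A real system would follow the surviving leaf pairs with an exact test on the actual geometry.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AABB:
    """Axis-aligned bounding box given by its min and max corners."""
    lo: tuple
    hi: tuple

    def overlaps(self, other):
        # Boxes overlap only if their intervals overlap on every axis.
        return all(a_lo <= b_hi and b_lo <= a_hi
                   for a_lo, a_hi, b_lo, b_hi in zip(self.lo, self.hi, other.lo, other.hi))


@dataclass
class Node:
    box: AABB
    children: Optional[list] = None  # None marks a leaf
    name: str = ""


def colliding_leaves(a, b, out=None):
    """Return pairs of leaf names whose boxes overlap.

    Parent boxes are tested first; if they do not overlap, none of their
    children are examined, which filters out most non-colliding pairs.
    """
    if out is None:
        out = []
    if not a.box.overlaps(b.box):
        return out
    if a.children is None and b.children is None:
        out.append((a.name, b.name))
    elif a.children is not None:
        for child in a.children:
            colliding_leaves(child, b, out)
    else:
        for child in b.children:
            colliding_leaves(a, child, out)
    return out
```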

AR / MR

AR/MR fuses the virtual environment with the real environment. It brings computing into the real world, letting humans interact with digital objects and information in their surroundings.

Elements of immersion

- Placing: a virtual object placed at a real-world position always stays at that position

- Scale: the apparent size of a virtual object changes as you move nearer to or farther from it

- Occlusion: a virtual object should be hidden when another virtual or real object is between it and the viewer

- Lighting: a virtual object should look different when the environmental lighting changes

- Solidity: a virtual object should not overlap other objects or float in the air

- Context awareness: the system knows every real object in the environment and keeps virtual objects in proper relationships with them
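The occlusion element can be sketched as a per-pixel depth comparison. The sketch assumes the device provides a depth map of the real scene that is aligned with the camera image, for example from a depth sensor or from depth estimation. The function and argument names are illustrative.

```python
def composite_with_occlusion(camera_rgb, real_depth, virtual_rgb, virtual_depth):
    """Show a virtual pixel only where the virtual object is closer to the
    viewer than the real surface at that pixel.

    All inputs are same-sized 2D lists. Depths are distances from the camera;
    virtual_depth is None wherever no virtual object was rendered.
    """
    out = []
    for cam_row, real_row, virt_row, vdepth_row in zip(
            camera_rgb, real_depth, virtual_rgb, virtual_depth):
        row = []
        for cam, real_d, virt, virt_d in zip(cam_row, real_row, virt_row, vdepth_row):
            if virt_d is not None and virt_d < real_d:
                row.append(virt)  # virtual object is in front: draw it
            else:
                row.append(cam)   # real surface is in front, or nothing virtual here
        out.append(row)
    return out
```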

Tracking in AR

Motion tracking of devices

Motion tracking in AR relies on several hardware modules:

- Motion tracking

  - Accelerometer

  - Gyroscope

  - Camera

- Location-based AR

  - Magnetometer

  - GPS
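How the accelerometer and gyroscope complement each other can be sketched with a complementary filter, a simple sensor-fusion technique. The gyroscope is accurate over short intervals but drifts when its rate is integrated. The accelerometer gives a drift-free but noisy tilt from the direction of gravity. The single pitch axis, the axis convention `atan2(ax, az)`, and `alpha = 0.98` are assumptions for illustration. Production AR trackers use more elaborate visual-inertial fusion that also uses the camera.

```python
import math


def complementary_filter(samples, dt, alpha=0.98):
    """Estimate pitch (radians) from (gyro_rate, ax, az) samples.

    gyro_rate: angular velocity about the pitch axis (rad/s)
    ax, az: accelerometer readings along the assumed forward and vertical
    axes, valid as a tilt reference while the device is not accelerating.
    """
    pitch = None
    history = []
    for gyro_rate, ax, az in samples:
        accel_pitch = math.atan2(ax, az)  # tilt implied by the gravity direction
        if pitch is None:
            pitch = accel_pitch  # initialize from the accelerometer
        else:
            # Trust the integrated gyro short-term, pull toward the accelerometer long-term.
            pitch = alpha * (pitch + gyro_rate * dt) + (1 - alpha) * accel_pitch
        history.append(pitch)
    return history
```

With a constant gyro bias of 0.01 rad/s over 10 s, pure integration would drift by 0.1 rad, whereas the filtered estimate settles near 0.005 rad.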

There are two main categories of AR motion tracking methods:

| Aspect | Outside-in tracking | Inside-out tracking |
|---|---|---|
| Definition | The device uses external cameras or sensors fixed in the environment to detect motion and track its position | The device uses its own built-in cameras or sensors to detect motion and track its position |
| Pros | More accurate | More portable |
| Cons | Less portable | Heavier, generates more heat, consumes more energy |
| Products | HTC Vive (VR) | Microsoft HoloLens, Google ARCore-enabled phones |

Inside-out tracking is based on SLAM (Simultaneous Localization and Mapping) technology. ARCore's motion tracking process is called concurrent odometry and mapping (COM); it tracks the phone's location relative to the surrounding world.

Environmental Understanding

Environmental understanding is the process by which an AR device recognizes objects in the real environment and uses that information to place and orient digital objects properly. The device detects feature points in the camera images, then clusters points that lie on a common surface to find planes.
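Finding a plane among clustered feature points is commonly done with RANSAC-style fitting. ARCore's actual algorithm is not described here, so treat this as a generic sketch. Feature points are assumed to be 3D positions in meters, and `threshold` and `iterations` are illustrative values.

```python
import random


def plane_from_points(p1, p2, p3):
    """Plane through three points as (unit normal n, d) with n.x + d = 0, or None if collinear."""
    u = [b - a for a, b in zip(p1, p2)]
    v = [b - a for a, b in zip(p1, p3)]
    n = (u[1] * v[2] - u[2] * v[1], u[2] * v[0] - u[0] * v[2], u[0] * v[1] - u[1] * v[0])
    length = (n[0] ** 2 + n[1] ** 2 + n[2] ** 2) ** 0.5
    if length < 1e-12:
        return None
    n = tuple(c / length for c in n)
    return n, -sum(nc * pc for nc, pc in zip(n, p1))


def ransac_plane(points, threshold=0.01, iterations=200, seed=0):
    """Return (plane, inliers) for the plane supported by the most points.

    Each iteration fits a plane to 3 random points and counts the points
    within `threshold` of it; the best-supported plane wins.
    """
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue  # collinear sample, try again
        n, d = plane
        inliers = [p for p in points
                   if abs(sum(nc * pc for nc, pc in zip(n, p)) + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```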

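An anchor is a specific position that a virtual object sticks to after the user has placed it. As the device refines its understanding of the environment, its pose estimates shift slightly; anchors allow the underlying system to correct that error by indicating which points are important.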
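One way to picture how anchors absorb tracking corrections is to store the anchor's pose relative to a map element that the tracking system keeps refining, such as a keyframe, and to recompute its world pose from that element. The 2D poses, the class names, and this mechanism are a hypothetical simplification for illustration, not ARCore's API or internals.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Position (x, y) and heading theta (radians) in a plane."""
    x: float
    y: float
    theta: float

    def compose(self, local):
        """Convert a pose expressed relative to this pose into this pose's parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(self.x + c * local.x - s * local.y,
                      self.y + s * local.x + c * local.y,
                      self.theta + local.theta)

    def inverse(self):
        """Pose of the parent frame as seen from this pose."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-(c * self.x + s * self.y), s * self.x - c * self.y, -self.theta)


class Anchor:
    """Hypothetical anchor attached to a tracked map element."""

    def __init__(self, placed_pose, map_element_pose):
        # Remember where the object sits relative to the map element at placement time.
        self.offset = map_element_pose.inverse().compose(placed_pose)

    def world_pose(self, map_element_pose):
        # Re-derive the world pose from the element's latest (possibly corrected) pose.
        return map_element_pose.compose(self.offset)
```

When tracking corrects the map element's pose, every anchor attached to it moves consistently, so the virtual object stays aligned with the real surface.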

Commercial Products

AR/MR experiences can be handheld or standalone. Some commercial AR products are listed below.

| Hardware | OS | Software framework | Company |
|---|---|---|---|
| Mobile phone | Android | ARCore | Google |
| Mobile phone | iOS | ARKit | Apple |
| HoloLens | Windows | Windows Holographic | Microsoft |

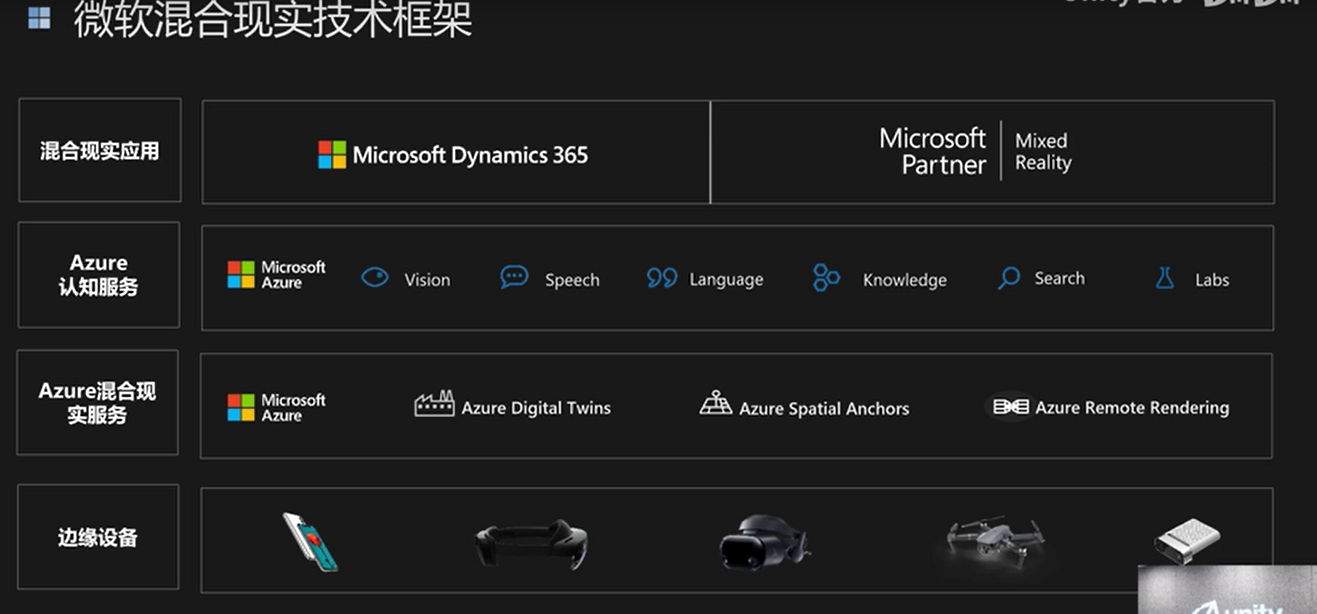

The figure above shows the structure of Microsoft Mixed Reality.

Constraints

- Lack of standard, well-established user interface design principles

- Hardware and devices

  - Size

  - Power

  - Heat

- Current 3D design pipelines need to be integrated

- Computer vision limitations

  - Occlusion

Related Subjects

Optical Imaging

- Lenses based on gratings

- Lenses based on waveguides

Rendering with the real environment

SLAM

SLAM (Simultaneous Localization and Mapping) builds a map of the environment while localizing a moving device within it. This lets an AR device know where it is and its pose relative to the environment, so it can compute what image to show on the screen.

Compared with SLAM for robots, SLAM for AR/MR focuses more on accuracy within a local environment.

See more about SLAM here.

Interaction

Hand recognition.