Microsoft Mixed Reality Development

There are many application development paths for Microsoft Mixed Reality platforms. This post covers OpenXR, Unity, StereoKit, MRTK, and more.

Overview

Target Devices

- HoloLens only supports UWP applications

- Windows MR Headsets support UWP and Win32 applications

WinRT and OpenXR APIs

Similarities

- Give applications and middleware (such as game engines) access to the MR features built into Windows

- Depend on a runtime (which converts OS data into API data) that is built into Windows

- Require the Windows SDK to call the APIs

- Support both C++ (C++/WinRT for the WinRT APIs) and C# interfaces

Differences

| ~ | WinRT APIs | OpenXR APIs |
|---|---|---|
| Defined by | Microsoft | Khronos Group |
| Status | Legacy | Industry standard |
| OS runtime requirement | Windows Runtime | OpenXR Runtime |
| Documentation | Windows.Perception.* namespace | Xr… |

A runtime converts lower-layer OS data into API-layer data. Both the Windows Runtime and the OpenXR Runtime are built into Windows.

WinRT API

The WinRT API, short for Windows Runtime API, is a set of APIs distributed with the Windows SDK that gives applications access to runtime and OS data.

The APIs in the following namespaces serve Mixed Reality use cases.

| Namespace | Purpose | Main classes and methods |
|---|---|---|
| Windows.Perception.People | Classes that describe the user | EyesPose, HandPose, HeadPose |
| Windows.Perception.Spatial | Classes to reason about spatial relationships within the user’s surroundings | SpatialAnchor, SpatialStationaryFrameOfReference |
| Windows.Perception.Spatial.Preview | Classes to track spatial nodes, allowing the user to reason about places and things in their surroundings | CreateLocatorForNode |
| Windows.Perception.Spatial.Surfaces | Classes that describe the surfaces observed in the user’s surroundings and their triangle meshes | ~ |
| Windows.UI.Input.Spatial | Classes that let apps react to gaze, hand gestures, motion controllers, and voice | SpatialPointerPose::TryGetAtTimestamp, SpatialInteractionManager::GetForCurrentView |

See this post for more introduction about HoloLens features and related APIs.

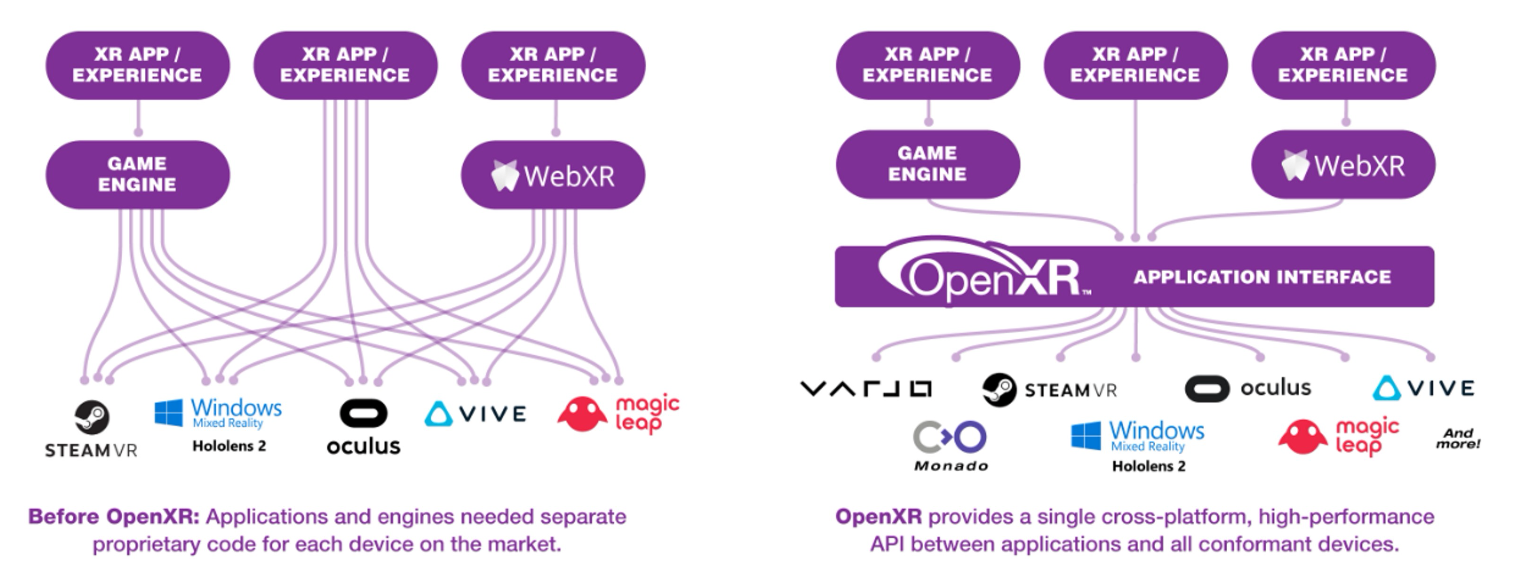

OpenXR API

OpenXR provides a generic interface for applications and engines to target HoloLens. The HoloLens APIs are wrapped by the OpenXR Runtime and exposed through the core API plus several kinds of extensions:

- OpenXR core API: systems, sessions, reference spaces

- OpenXR KHR (official) extension: Direct3D 11/12 integration

- OpenXR EXT (pre-official) extension: hand articulation, eye gaze

- OpenXR MSFT (vendor) extension: Unbounded reference space, spatial anchors, hand interaction, scene understanding, other UWP CoreWindow API, other legacy WinRT APIs
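
To make the extension layering concrete, here is a minimal sketch of how an app might decide which extensions to enable. It is plain standard C++ with no OpenXR dependency: in a real app the list of available names would come from `xrEnumerateInstanceExtensionProperties`, and the selected names would be passed in `XrInstanceCreateInfo::enabledExtensionNames`. The extension names are the registry names for the features listed above; the helper `SelectExtensions` and the choice of desired extensions are assumptions made for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Extensions this hypothetical app would like to use, one or more per layer.
const std::vector<std::string> kDesiredExtensions = {
    "XR_KHR_D3D11_enable",                // KHR: Direct3D 11 integration
    "XR_EXT_hand_tracking",               // EXT: hand articulation
    "XR_EXT_eye_gaze_interaction",        // EXT: eye gaze
    "XR_MSFT_unbounded_reference_space",  // MSFT: unbounded reference space
    "XR_MSFT_spatial_anchor",             // MSFT: spatial anchors
};

// Hypothetical helper: returns the desired extensions that the runtime
// reports as available, preserving the order of `desired`.
std::vector<std::string> SelectExtensions(
    const std::vector<std::string>& available,
    const std::vector<std::string>& desired = kDesiredExtensions) {
    std::vector<std::string> enabled;
    for (const std::string& name : desired) {
        if (std::find(available.begin(), available.end(), name) != available.end()) {
            enabled.push_back(name);
        }
    }
    return enabled;
}
```

An app would typically treat the graphics extension as mandatory and the others as optional, falling back gracefully when, for example, eye gaze is not reported by the runtime.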

Some resources

Native Development

Native development means building the application directly on the WinRT APIs or the OpenXR APIs, without middleware such as a game engine. This gives developers more direct access to the raw data of HoloLens and lets them build their own middleware or framework.

Both the WinRT APIs and the OpenXR APIs can be used to build UWP or Win32 applications, and both can be used from C++ (C++/WinRT for the WinRT APIs) or C#.

Samples using WinRT API (Legacy)

UWP sample based on WinRT (using C++/CX, C++/WinRT, C#)

Win32 sample based on WinRT (using C++/WinRT)

Since UWP and Win32 applications differ only in how they are packaged and the source code is mostly the same, this section mainly uses the UWP sample written in C++/WinRT as a reference.

Basic Hologram

- Project link: https://github.com/microsoft/Windows-universal-samples/tree/main/Samples/BasicHologram

- Project type: Holographic DirectX 11 App (Universal Windows) (C++/WinRT) template in Visual Studio

Main Working Procedure

- Start: `wWinMain` function in `AppView.cpp`.
  - Create an `IFrameworkView`
    - Create `ApplicationView`
    - Create `CoreWindow`
    - Create `m_holographicSpace` for `CoreWindow`
  - Start the `CoreApplication`.
- Update: `Update` and `Render` functions in `AppMain.cpp`.
  - Update
    - Create a new `holographicFrame` from `m_holographicSpace`
    - Get the prediction of where the holographic cameras will be when this frame is presented, via `holographicFrame.CurrentPrediction()`
    - Recreate resource views and depth buffers as needed
    - Get the coordinate system to use as the basis for rendering
    - Process gaze and gesture input
    - Process time-based updates in the `StepTimer` class, e.g. position and rotate holograms
    - Update constant buffer data
    - Return the `holographicFrame`
  - Use the `Render` function, which takes the `holographicFrame`, to render the current frame to each holographic camera according to the current app and spatial positioning state
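
The time-based update step can be sketched as follows. This is not the sample's code, only a minimal standard C++ illustration of the idea: derive the hologram's rotation from the total elapsed time rather than accumulating a per-frame delta, so the result does not depend on frame rate. The 45 degrees per second rate and the helper name `RotationRadians` are assumptions.

```cpp
#include <cassert>
#include <cmath>

constexpr double kPi = 3.14159265358979323846;
constexpr double kDegreesPerSecond = 45.0;  // illustrative spin rate (assumption)

// Hypothetical helper: rotation angle in radians for a hologram that spins
// at a constant rate, wrapped into [0, 2*pi). `totalSeconds` is the total
// elapsed time reported by the app's timer and must be non-negative.
double RotationRadians(double totalSeconds) {
    assert(totalSeconds >= 0.0);
    const double radiansPerSecond = kDegreesPerSecond * kPi / 180.0;
    return std::fmod(totalSeconds * radiansPerSecond, 2.0 * kPi);
}
```

The returned angle would then feed the hologram's model transform before the constant buffer is updated.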

Classes in Windows.Graphics.Holographic and Windows.Perception.* namespaces

| Name | Description |
|---|---|
| HolographicSpace | Portal into the holographic world: controls immersive rendering, receives holographic camera data, and provides access to spatial reasoning APIs |
| SpatialLocator | Represents the MR device, tracks its motion, and provides coordinate systems that can be understood relative to its location; can create a stationary frame of reference with its origin placed at the device’s position when the app is launched |
| SpatialAnchor | Provides coordinate systems, with or without easing adjustments applied; can be stored using the SpatialAnchorStore class |
| SpatialSurfaceObserver | Provides information about surfaces in application-specified regions of space near the user, in the form of SpatialSurfaceInfo objects |

Spatial Mapping Sample

- Project link: https://github.com/microsoft/Windows-universal-samples/tree/master/Samples/HolographicSpatialMapping

- Set up a `SpatialSurfaceObserver`
  - Ensure the app has the device’s permission
  - Provide spatial volumes (e.g. a cube or sphere) that define the regions of interest where the app wants to receive spatial mapping data, using the `SetBoundingVolumes` method
    - volume with a world-locked spatial coordinate system: for a fixed region of the physical world
    - volume with a body-locked spatial coordinate system: for a region that moves with the user (but does not rotate)
  - Register for the `ObservedSurfacesChanged` event
- Retrieve spatial surface information
  - subscribe to the event: for a fixed region of the physical world
  - polling: for a dynamic region of the physical world
  - `SpatialSurfaceInfo` describes a single extant spatial surface, including a unique ID, bounding volume and time of last change. `GetObservedSurfaces` of the observer returns a map of <GUID, SpatialSurfaceInfo>
- Process asynchronous mesh requests
  - `TryComputeLatestMeshAsync` of each `SpatialSurfaceInfo` asynchronously returns one `SpatialSurfaceMesh` object, which contains several `SpatialSurfaceMeshBuffer` objects that represent the triangle mesh vertex parameters
  - Post-process the mesh data: hole filling, hallucination removal, smoothing, plane finding
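
Whether the surface map arrives through the event or through polling, the app has to work out which meshes to request again and which to drop. Below is a minimal sketch of that bookkeeping in standard C++. The types `SurfaceRecord`, `SurfaceMap`, and `SurfaceChanges` and the function `DiffSurfaces` are hypothetical stand-ins: real code would read the ID and update time from each `SpatialSurfaceInfo` and call `TryComputeLatestMeshAsync` for every entry in `toRequest`.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Stand-in for the per-surface data the app caches (assumption).
struct SurfaceRecord {
    std::int64_t updateTime;  // time of last change, in arbitrary ticks
};

// Keyed by surface ID; a string is used here in place of a GUID.
using SurfaceMap = std::map<std::string, SurfaceRecord>;

struct SurfaceChanges {
    std::vector<std::string> toRequest;  // new or updated surfaces
    std::vector<std::string> toRemove;   // surfaces no longer observed
};

// Compares the cached surfaces with the latest observed set.
SurfaceChanges DiffSurfaces(const SurfaceMap& cached, const SurfaceMap& observed) {
    SurfaceChanges changes;
    for (const auto& entry : observed) {
        const auto it = cached.find(entry.first);
        // Request a mesh if the surface is new or has changed since it was cached.
        if (it == cached.end() || it->second.updateTime < entry.second.updateTime) {
            changes.toRequest.push_back(entry.first);
        }
    }
    for (const auto& entry : cached) {
        if (observed.find(entry.first) == observed.end()) {
            changes.toRemove.push_back(entry.first);
        }
    }
    return changes;
}
```

Comparing update times avoids recomputing meshes for surfaces that have not changed, which matters because mesh computation is asynchronous and comparatively expensive.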

Classes in Windows.Perception.* namespace

| Class | Functions |
|---|---|
| SpatialSurfaceObserver | provides information about surfaces in application-specified regions of space near the user, in the form of SpatialSurfaceInfo objects. |
| SpatialSurfaceInfo | describes a single extant spatial surface, including a unique ID, bounding volume and time of last change. It will provide a SpatialSurfaceMesh asynchronously upon request. |
| SpatialSurfaceMeshOptions | contains parameters used to customize the SpatialSurfaceMesh objects requested from SpatialSurfaceInfo. |
| SpatialSurfaceMesh | represents the mesh data for a single spatial surface. The data for vertex positions, vertex normals and triangle indices is contained in member SpatialSurfaceMeshBuffer objects. |
| SpatialSurfaceMeshBuffer | wraps a single type of mesh data. |

Main Working Procedure

Samples using OpenXR API

Procedure of sample using OpenXR API.

- The application starts and decides which API version and extensions to use

- Create the instance

- Create the session; the main logic runs here

- Destroy the session and the instance, then close the application

More information about the working procedure can be found here
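
The ordering of these steps can be sketched with RAII in standard C++. The `Instance` and `Session` classes below are hypothetical stand-ins that only record events; in a real app their constructors and destructors would call `xrCreateInstance`, `xrCreateSession`, `xrDestroySession`, and `xrDestroyInstance`. The point of the sketch is that the session must be destroyed before the instance that owns it.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Records lifecycle events so the ordering can be inspected.
using EventLog = std::vector<std::string>;

// Hypothetical owner of an instance handle.
class Instance {
public:
    explicit Instance(EventLog& log) : log_(log) { log_.push_back("create instance"); }
    ~Instance() { log_.push_back("destroy instance"); }
    Instance(const Instance&) = delete;
    Instance& operator=(const Instance&) = delete;
    EventLog& log() { return log_; }

private:
    EventLog& log_;
};

// Hypothetical owner of a session handle; a session belongs to an instance.
class Session {
public:
    explicit Session(Instance& instance) : log_(instance.log()) {
        log_.push_back("create session");
    }
    ~Session() { log_.push_back("destroy session"); }
    Session(const Session&) = delete;
    Session& operator=(const Session&) = delete;
    void RunFrame() { log_.push_back("frame"); }  // main logic goes here

private:
    EventLog& log_;
};

// Runs the whole lifecycle for a fixed number of frames.
void RunApp(EventLog& log, int frameCount) {
    Instance instance(log);
    Session session(instance);
    for (int i = 0; i < frameCount; ++i) {
        session.RunFrame();
    }
    // Locals are destroyed in reverse order: session first, then instance.
}
```

Calling `RunApp(log, 2)` leaves the log with the create, frame, and destroy events in the same order as the list above.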

Game Engine

Game engines are mainly used to develop games, but they are also well suited to developing Mixed Reality applications.

Unity3d

Unity3d has built-in XR plugins for building applications for different platforms and devices. There is also a Windows XR plugin, which calls the WinRT APIs. Both are now deprecated or legacy.

Starting from version 2020.3 LTS, Unity3d supports the Unity OpenXR plugin for Mixed Reality development, and starting in Unity 2021.2, the Unity OpenXR plugin is the only supported Unity backend for targeting HoloLens 2 and Windows MR headsets. The Unity OpenXR plugin only provides access to the OpenXR core features defined in the standard. Full access to the features of HoloLens and Windows MR headsets requires the Mixed Reality OpenXR plugin, which calls the MSFT vendor extensions of the OpenXR APIs.

The Unity C# APIs provided by the 2 OpenXR plugins are as follows.

MRTK is available for Unity to help develop applications.

Unreal

Starting from version 4.26, Unreal supports the OpenXR APIs for developing for HoloLens and Mixed Reality headsets.

MRTK is also available for Unreal to help develop applications. MRTK-Unreal 0.12 supports OpenXR projects.

MRTK

MRTK, Mixed Reality Toolkit, is a Microsoft-driven open source project that provides a set of components and features, used to accelerate cross-platform MR app development in Unity and Unreal.

Starting with version 2.7, MRTK for Unity supports the OpenXR plugin. The current structure of Unity is as follows.

Library

StereoKit

StereoKit is a library built on top of the OpenXR APIs. It wraps them and provides easy-to-use C# interfaces for developers. Developers can install the library as a NuGet package.

Compared to developing directly on the native OpenXR APIs, StereoKit encapsulates the low-level APIs. Compared to game engines, StereoKit is more lightweight, more focused on XR development, and offers a code-only development workflow.

App Cycle

This section takes the Holographic D3D 11 UWP Application template (C++/WinRT) as an example to show the app cycle of a Mixed Reality app that renders a stable spinning cube in space.

General procedure

A Holographic template application is similar to a Direct3D application. The main logic repeats the following actions to render each frame.

- Maintain the holograms’ pose and appearance

- Retrieve the poses of the stereo rendering cameras (on HoloLens, these correspond to the left and right displays)

- Pass the holograms’ pose and the stereo rendering cameras’ view and projection matrices to the graphics rendering pipeline.
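
The per-frame math can be sketched in standard C++ as below. This is an illustration only, not the template's code: on HoloLens the view and projection matrices are supplied by the platform for each camera, whereas this sketch fakes the view as a purely horizontal eye offset and leaves the projection out. All names (`Mat4`, `ModelMatrix`, `EyeView`, and so on) are made up for the example.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// 4x4 matrix in row-major storage, used with column vectors (v' = M * v).
using Mat4 = std::array<std::array<float, 4>, 4>;
using Vec4 = std::array<float, 4>;

Mat4 Identity() {
    Mat4 m{};  // zero-initialized
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

Mat4 Multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) r[i][j] += a[i][k] * b[k][j];
    return r;
}

Vec4 Transform(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j) r[i] += m[i][j] * v[j];
    return r;
}

Mat4 Translation(float x, float y, float z) {
    Mat4 m = Identity();
    m[0][3] = x;
    m[1][3] = y;
    m[2][3] = z;
    return m;
}

// Right-handed rotation about the Y axis.
Mat4 RotationY(float radians) {
    Mat4 m = Identity();
    const float c = std::cos(radians);
    const float s = std::sin(radians);
    m[0][0] = c;
    m[0][2] = s;
    m[2][0] = -s;
    m[2][2] = c;
    return m;
}

// Hologram pose: spin in place first, then move to the world position.
Mat4 ModelMatrix(float spinRadians, float x, float y, float z) {
    return Multiply(Translation(x, y, z), RotationY(spinRadians));
}

// Assumption: each eye differs from the head only by a horizontal offset,
// so its view matrix is the inverse of that translation.
Mat4 EyeView(float eyeOffsetX) { return Translation(-eyeOffsetX, 0.0f, 0.0f); }

// Combined transform handed to the rendering pipeline for one eye.
Mat4 ModelView(const Mat4& view, const Mat4& model) { return Multiply(view, model); }
```

The model matrix is computed once per frame, while the view (and, in a real app, the projection) is applied once per eye, which is why the template renders the same hologram data to both cameras.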

App structure

Main loop

// AppView.cpp

AppMain logic

// HolographicTemplateAppMain.cpp

Hologram logic

// SpinningCubeRenderer.cpp

CameraResources logic

// CameraResources.cpp

Related WinRT API Classes

// Namespace: Windows.Graphics.Holographic
